When comparing tools or approaches to assessments, interviews and so on, the question of the difference between ‘objective’ and ‘subjective’, and the benefits of each, sometimes comes up. Unfortunately, some of the responses and content on this subject are quite misleading, and often the wrong questions are asked.

Definitions

First, let’s start with the definitions of these terms:

Subjective: based on or influenced by personal feelings, tastes, or opinions.

‘his views are highly subjective’

Contrasted with objective

‘there is always the danger of making a subjective judgement’

Objective: (of a person or their judgement) not influenced by personal feelings or opinions in considering and representing facts.

‘historians try to be objective and impartial’

Objective tests are measures in which responses maximize objectivity, in the sense that response options are structured such that examinees have only a limited set of options (e.g. Likert scale, true or false). Structuring a measure in this way is intended to minimize subjectivity or bias on the part of the individual administering the measure so that administering and interpreting the results does not rely on the judgment of the examiner.

Are we assessing Skills or Knowledge?

A few years ago I looked at all the SFIA tools available at the time, planning to select the best one and use it for our customer projects. However, I found significant issues with all of them: they didn’t support good practice in using SFIA and therefore wouldn’t deliver in the best way for our customers. One of these issues relates to how the tools support skills assessment. The tools marketed as using objective testing are actually only testing ‘knowledge’ – which means they don’t meet our customers’ need to assess skills and experience. We already suffer from an overreliance on exams, qualifications and certifications that often only demonstrate knowledge and understanding rather than experience.

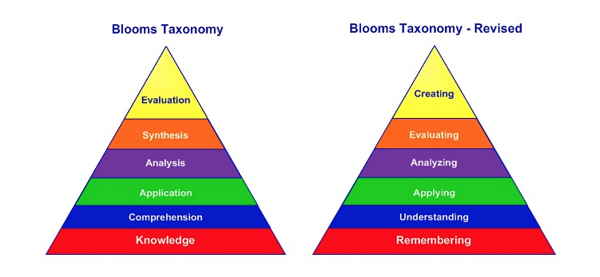

Bloom’s Taxonomy is used extensively in the education sector, and explains this quite well. The objective test solutions that I’ve seen are testing level 1 (Knowledge / Remembering) and sometimes level 2 (Comprehension / Understanding) of Bloom’s Taxonomy. When we assess someone using SFIA, we are focussing on level 3 (Application / Applying) – a fully developed skill which requires the application of knowledge and understanding, and the building of competence and experience. Levels 1 and 2 tell you that someone might be able to do something in theory, but assessing against level 3 is checking that they ‘have’ done it – proven capability and experience, over theory!

Many of the tools only offer a binary response – “do you have the skill or not, yes or no”.

As a result of the tools research, I concluded that none of the existing tools met our customers’ needs or adhered to recognised international standards on assessment methods, and therefore I started designing a new solution – which became our SkillsTX SaaS solution. The design of this tool was a collaboration, combining best practice in how to assess with extensive experience of using SFIA. We wanted to make the self-assessment element much more objective, and align with best practices and international standards for assessment, by using a graduated scale of how well the SFIA description fits the individual’s working experience – so that’s exactly what we did.

This fits the reality we find in the working world – it isn’t a binary choice between having the skill or not having the skill. Often people have experience which provides only a partial match to the SFIA description, so they have partially developed the skill, and therefore need assessment answer options which recognise this and thereby leave room for action in their development plans. The process needs to be fair, stand up to scrutiny, and support individuals in getting the most accurate and complete profile of their skills. This includes the need to allow people to capture skills from previous roles, whereas many of the tools only assessed the skills of the current role. See my previous blog on assessment approach.
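To make the contrast between a binary answer and a graduated scale concrete, here is a minimal sketch in Python. It is purely illustrative and is not how the SkillsTX tool is implemented: the four-point `Fit` scale, its labels, the `development_gaps` helper and the placeholder skill names are all assumptions made for this example.

```python
from enum import IntEnum


class Fit(IntEnum):
    """Hypothetical graduated answer options (labels and values are assumptions)."""
    NOT_AT_ALL = 0
    PARTIALLY = 1
    MOSTLY = 2
    FULLY = 3


def development_gaps(responses):
    """Return skills with some, but not full, match to the description, sorted by name."""
    return sorted(
        skill for skill, fit in responses.items()
        if Fit.NOT_AT_ALL < fit < Fit.FULLY
    )


# Placeholder skill names, not a real profile.
responses = {
    "Skill A": Fit.FULLY,
    "Skill B": Fit.PARTIALLY,
    "Skill C": Fit.NOT_AT_ALL,
    "Skill D": Fit.MOSTLY,
}

# A yes/no view collapses every partial match into "no".
binary_view = {skill: fit == Fit.FULLY for skill, fit in responses.items()}

# The graduated view keeps partial matches as candidates for a development plan.
gaps = development_gaps(responses)
```

In this sketch the binary view records Skill B and Skill D simply as "no", while the graduated view keeps them visible as partially developed skills that a development plan could target.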

Red Herring

Focussing on the difference between subjective vs objective testing, when the testing being carried out is purely on someone’s knowledge, risks wasting time. The first thing to decide is ‘what’ you are trying to assess. This issue is one of the biggest problems in the technology-based professions, where there is too much focus on ‘what you know’ (theory or knowledge) rather than ‘what you have done’ (proven capability).

Once you have agreed what you are assessing (which I hope will be ‘skills developed’ and ‘proven capability and experience’), ensure the assessment approach increases objectivity – it’s rarely a binary choice between ‘subjective’ and ‘objective’! Self-assessment is always going to run the risk of being subjective, but objectivity can be increased by the way the information and questions are presented, how the questions are asked, the answer options, the analysis, and the follow-up actions such as endorsement, certification, and development planning between managers and employees.